AI Alignment


On the alignment front, our group has focused on methods that let people align AI systems without deep technical expertise. One such idea is Alignment via Conversation: users explain their alignment goals to an AI agent in natural dialogue, and the agent handles the technical steps, such as fine-tuning and prompt engineering. We also introduced TELeR, a standardized taxonomy for designing and categorizing prompts in LLM benchmarking, which enables consistent comparisons across studies and clarifies how prompt design affects AI performance on complex tasks.

Project 1: Alignment via Conversation (Funded by NSF)

In open-domain dialog systems, it is often unclear how the end user expects a new conversation to be grounded and structured. An ideal system should therefore first hold a pre-conversation with the user about their expectations and their preferred knowledge base for grounding, before the actual conversation begins. In other words, a “Conversation about Conversation”, i.e., a “Meta-Conversation”, should happen with the user beforehand.

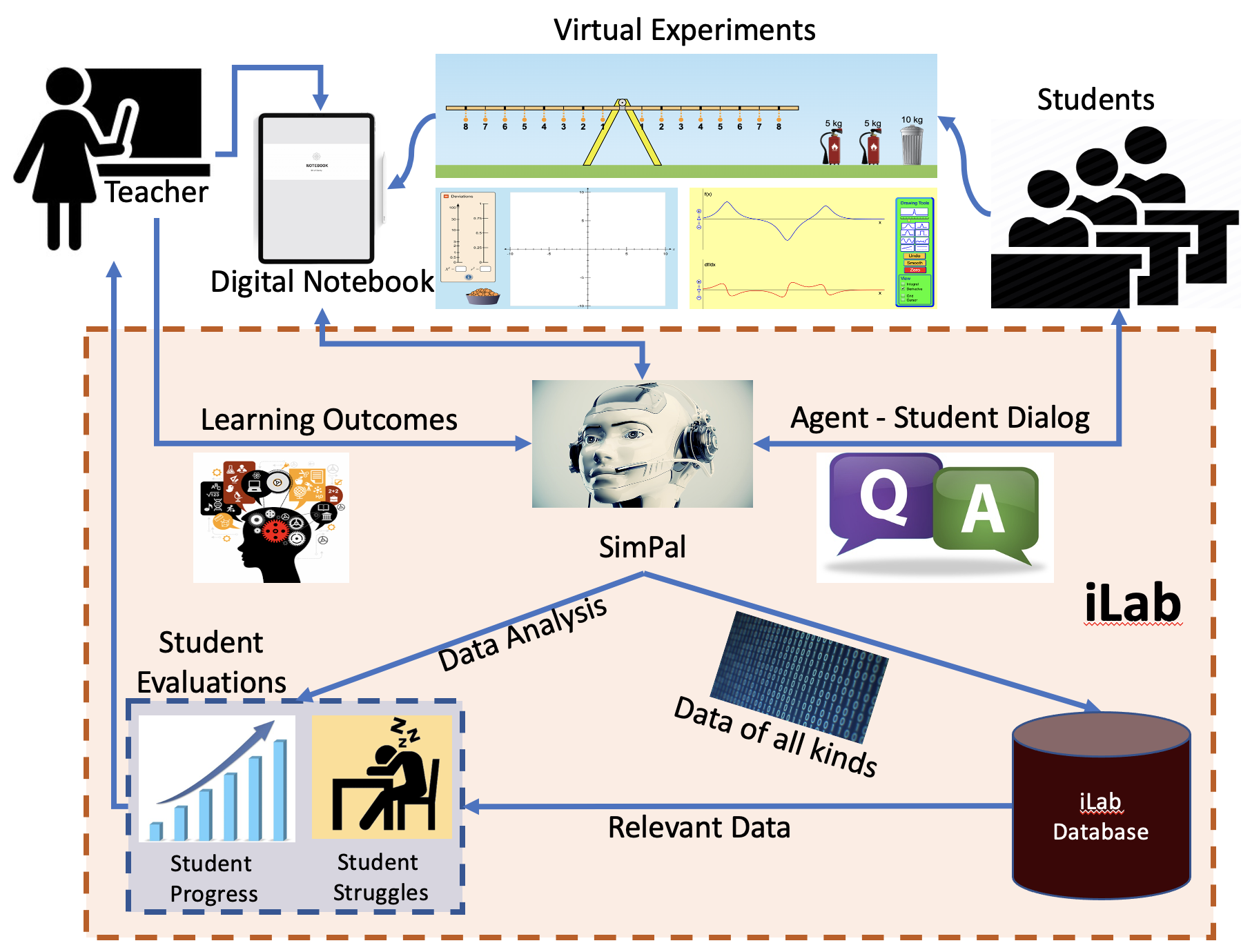

This is an ongoing project in my lab, where we are developing a “Meta-Conversation Framework” to create dialog-based interactive laboratory experiences for middle school science students and teachers in the context of simulation-based science experiments.

A key component of this framework is SimPal, an intelligent conversational agent that learns from teachers through a “Meta-Conversation”: it solicits their instructional goals for a simulation experiment and stores them in a computational representation. In other words, the teacher tells the agent, in plain natural language, what the instructional goals are for a particular scientific experiment. The agent then uses this representation to facilitate and customize an interactive, knowledge-grounded conversation (powered by state-of-the-art Large Language Models) with students as they run experiments, to enhance their learning experience. Unlike existing intelligent tutoring systems and pedagogical conversational agents, SimPal can work with any off-the-shelf third-party simulation, a unique feature of this project enabled by our proposed Meta-Conversation technique.

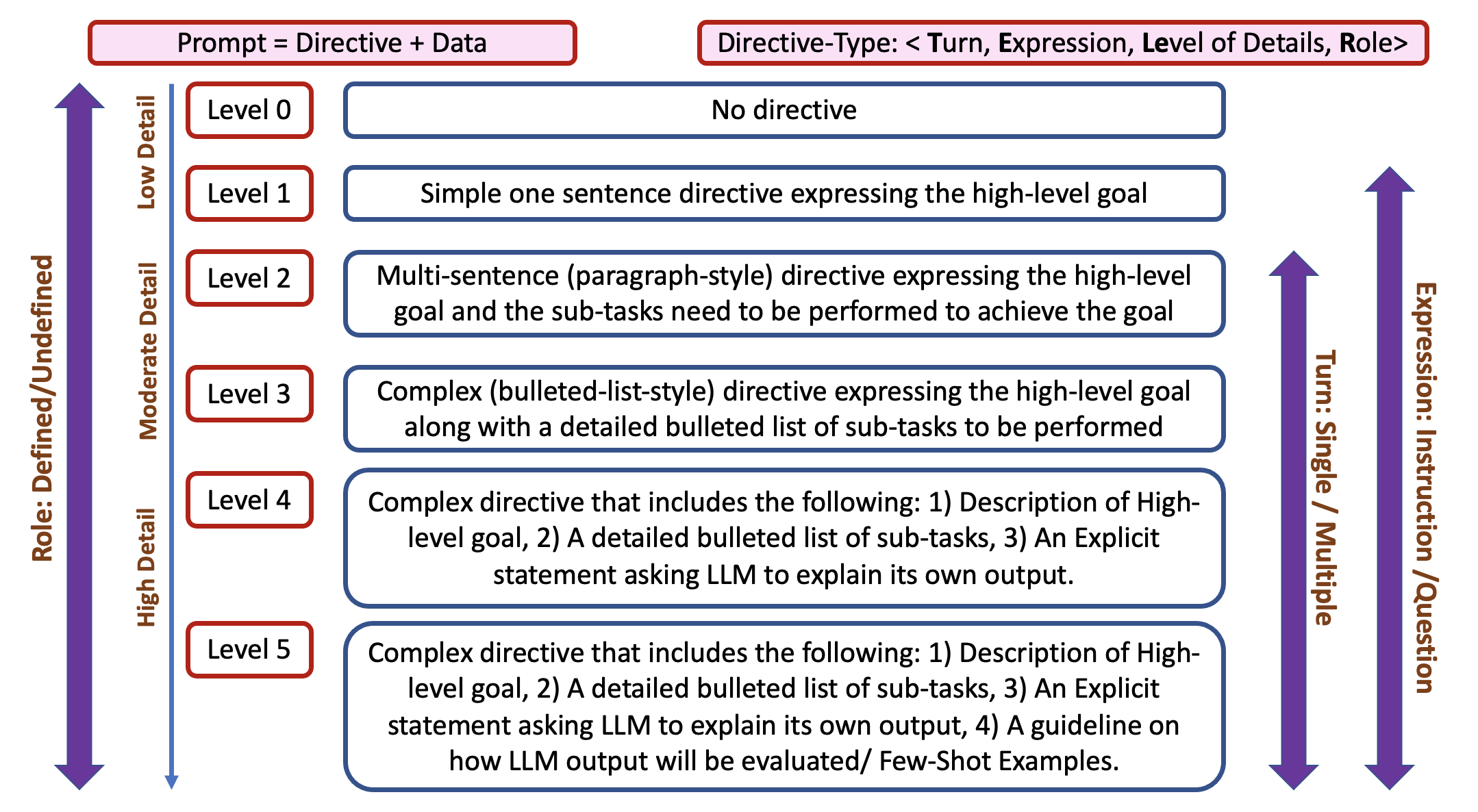
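The flow described above, from the teacher's stated goals to a grounded student conversation, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not SimPal's actual implementation: the `InstructionalGoals` class, its fields, and `build_student_prompt` are hypothetical names introduced here, and the call to a language model is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InstructionalGoals:
    """Hypothetical stored representation of a teacher's meta-conversation."""
    experiment: str
    learning_objectives: List[str] = field(default_factory=list)
    grounding_sources: List[str] = field(default_factory=list)


def build_student_prompt(goals: InstructionalGoals, student_message: str) -> str:
    """Assemble a prompt that grounds the student conversation in the stored goals."""
    objectives = "\n".join(f"- {o}" for o in goals.learning_objectives)
    sources = "\n".join(f"- {s}" for s in goals.grounding_sources)
    return (
        f"Experiment: {goals.experiment}\n"
        f"Instructional goals:\n{objectives}\n"
        f"Ground answers in:\n{sources}\n"
        f"Student: {student_message}"
    )


# Illustrative values only; a real system would extract these from the
# teacher's natural-language meta-conversation.
goals = InstructionalGoals(experiment="Pendulum simulation")
goals.learning_objectives.append("Relate string length to period")
goals.grounding_sources.append("Teacher-provided lab handout")

prompt = build_student_prompt(goals, "Why did the swing slow down?")
print(prompt)
```

Keeping the goals in a separate structure, rather than inside the simulation, is what would let the same agent sit alongside any third-party simulation in this sketch.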

Project 2: TELeR Taxonomy: Alignment via Prompt Engineering

Conducting benchmarking studies on LLM alignment is challenging because LLMs’ performance varies widely with the prompt type or style used and with the degree of detail provided in the prompt. To address this issue, we propose a general taxonomy, called TELeR, that can be used to design prompts with specific properties in order to perform a wide range of complex tasks. This taxonomy allows future benchmarking studies to report the specific categories of prompts they used, enabling meaningful comparisons across studies. By establishing a common standard, it also lets researchers draw more accurate conclusions about LLMs’ performance on a specific complex task.
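One way a benchmarking study might record the prompt category next to each result is sketched below. It is a minimal illustration, not official TELeR tooling: `PromptCategory`, its example field values, and `group_by_category` are hypothetical names, the four fields assume the dimensions suggested by the TELeR acronym (Turn, Expression, Level of details, Role), and the scores are placeholders rather than measured results.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class PromptCategory:
    """Hypothetical record of the prompt category used in one benchmark run."""
    turn: str        # assumed values, e.g. "single" or "multi"
    expression: str  # assumed values, e.g. "question" or "instruction"
    level: int       # degree of detail in the prompt (non-negative integer)
    role: str        # assumed values, e.g. "defined" or "undefined"

    def label(self) -> str:
        """Compact string that a study could report with its results."""
        return (
            f"turn={self.turn};expression={self.expression};"
            f"level={self.level};role={self.role}"
        )


def group_by_category(
    results: List[Tuple[PromptCategory, float]]
) -> Dict[str, List[float]]:
    """Group scores so that only runs with the same prompt category are compared."""
    grouped: Dict[str, List[float]] = {}
    for category, score in results:
        grouped.setdefault(category.label(), []).append(score)
    return grouped


terse = PromptCategory("single", "instruction", 1, "undefined")
detailed = PromptCategory("single", "instruction", 3, "defined")

# Placeholder scores for illustration only.
grouped = group_by_category([(terse, 0.5), (detailed, 0.9), (terse, 0.7)])
for label, scores in grouped.items():
    print(label, scores)
```

Reporting the label with every score makes it explicit when two studies used different prompt categories and their numbers should not be compared directly.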

Using the TELeR taxonomy, we have already conducted multiple benchmarking studies on different goal tasks, e.g., Summarization, Question Generation, and Cognitive Bias Detection.